7 Expectation and Concentration

7.1 Concentration inequalities

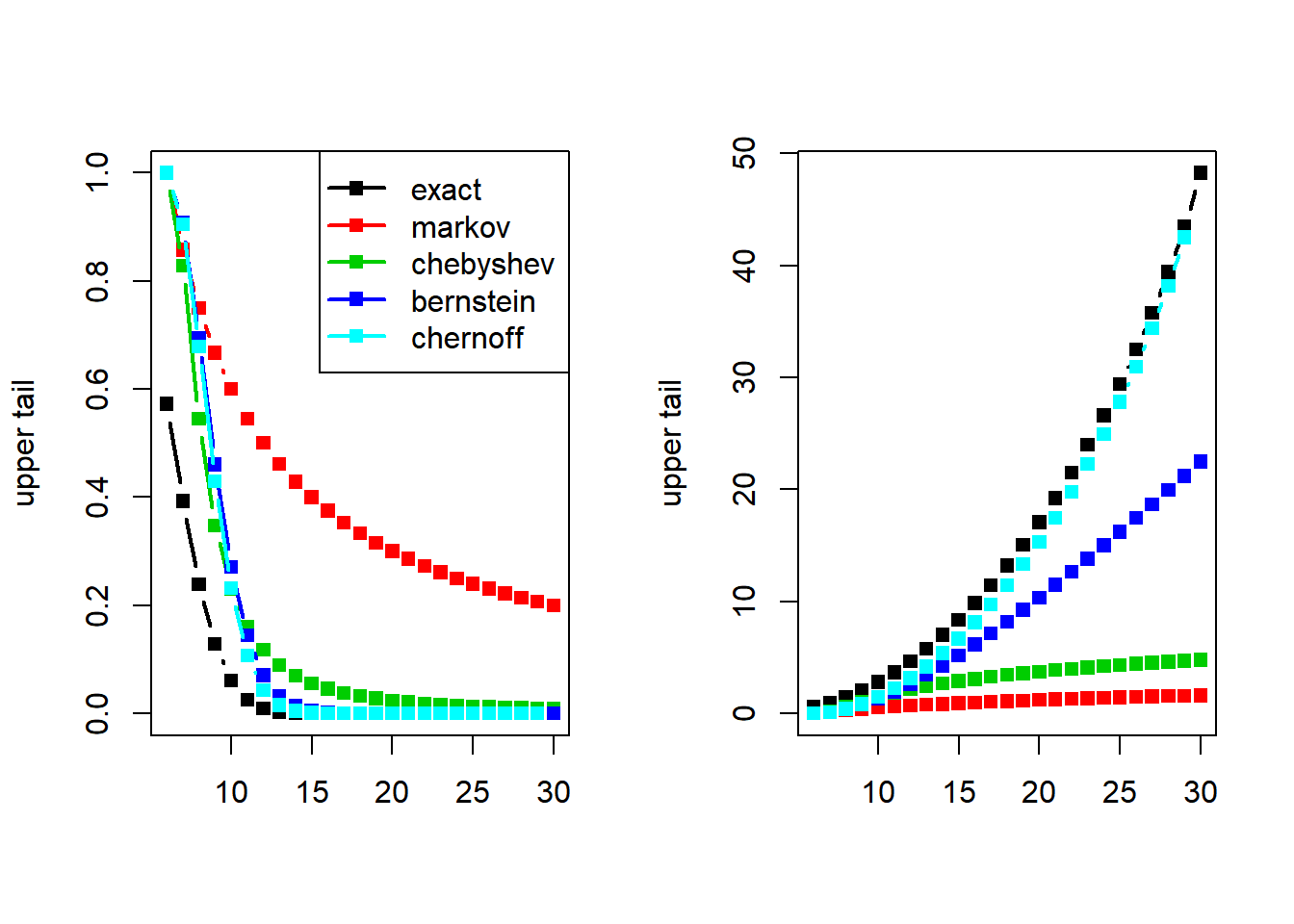

Let us compare the accuracy of some of the main concentration inequalities for the binomial distribution. We consider \(Y\) binomial with parameters \((n, p)\) specified below, and compute the bounds given by Markov, Chebyshev, Bernstein, and Chernoff.

Exact upper tail:

Markov’s upper bound:

Chebyshev’s upper bound:

Bernstein’s upper bound:

Chernoff’s upper bound:

b = y/n

chernoff = exp(- n*(b*log(b/p) + (1-b)*log((1-b)/(1-p))))

par(mfrow = c(1, 2))

matplot(y, cbind(exact, markov, chebyshev, bernstein, chernoff), type = "b", lty = 1, lwd = 2, pch = 15, xlab = "", ylab = "upper tail")

legend("topright", legend = c("exact", "markov", "chebyshev", "bernstein", "chernoff"), , lty = 1, lwd = 2, pch = 15, col = 1:5)

matplot(y, -log(cbind(exact, markov, chebyshev, bernstein, chernoff)), type = "b", lty = 1, lwd = 2, pch = 15, xlab = "", ylab = "upper tail") # applying a log transformation to zoom in on the tails